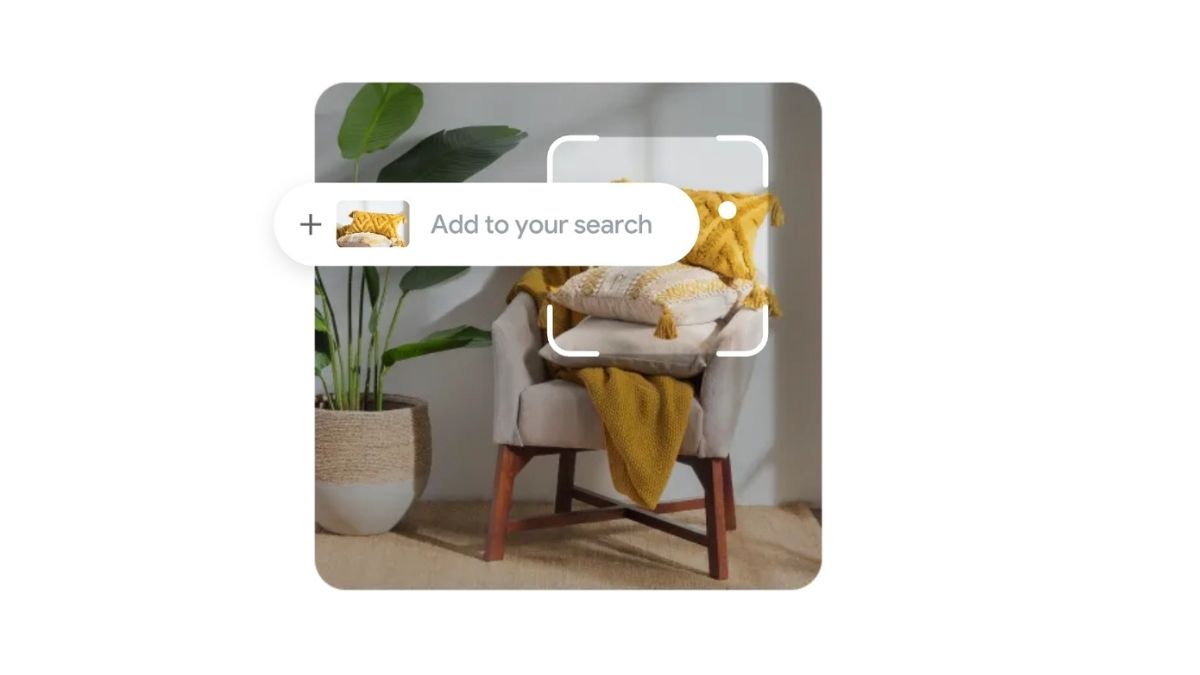

JAKARTA - Google Lens is a very useful feature for searching with images by finding related objects. Unfortunately, image search results are often off target because the search lacks context. Google therefore plans to add a feature to Lens that lets its optical recognition tool (OCR) accept additional context. This extra context clarifies what the user is looking for, so the search results can be much more accurate.

Android Authority found the new feature in version 15.22.29.arm64 of the Google app. It lets users add a query or a short instruction by voice. To use it, users open the Google Lens page and press the shutter button in the middle of the screen. They then press and hold until Google prompts for a command, and speak additional context about the captured image. Once the command is processed, Google Lens returns more specific results than before. For example, if you photograph a bag and add its brand name as context, the results will show bags from that brand.

For now, the additional-query feature works only for image searches. However, Android Authority also found strings that read, "Explore how people use Lens. Search with video. Hold on to record videos and melt them." This suggests the feature will also come to video search. Note that neither feature is being added to the standalone Google Lens app; users will find them only in Lens within Google Search. Both features are still in development, and there is no word yet on when they will launch. Because they are still being tested, Google may cancel them or keep them in beta for a long time.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so the translations may still contain inaccuracies. Please always refer to the Indonesian version as our primary language. (System supported by DigitalSiber.id)