JAKARTA - Generative artificial intelligence (AI), which can create and analyze images, text, audio, video, and more, is now increasingly used in health care, driven by both Big Tech companies and a growing number of startups.

Google Cloud, Google's cloud services and products division, is collaborating with Highmark Health, a nonprofit health care company based in Pittsburgh, on generative AI tools designed to personalize the patient intake experience.

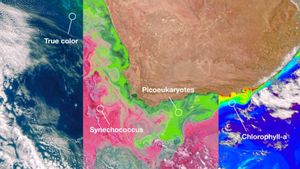

Amazon's AWS division also says it is working with unnamed customers on using generative AI to analyze medical databases for "social determinants of health."

Microsoft Azure is also helping to build a generative AI system for Providence, a nonprofit health care network, to triage messages sent by patients to care providers.

Leading generative AI startups in the health sector include Ambience Healthcare, which is developing a generative AI application for clinicians; Nabla, an ambient AI assistant for practitioners; and Abridge, which creates analytics tools for medical documentation.

However, both professionals and patients are unsure whether health-focused generative AI is ready for widespread use.

Andrew Borkowski, chief AI officer at the VA Sunshine Healthcare Network, the largest health system of the U.S. Department of Veterans Affairs, is not convinced that deploying generative AI is appropriate at this stage. Borkowski warns that deployment may be premature because of the technology's "significant limits" and concerns about its effectiveness.

Several studies suggest that these concerns are justified. In a study published in the journal JAMA Pediatrics, OpenAI's generative AI chatbot ChatGPT, which some health organizations have piloted for limited use cases, was found to make errors in diagnosing pediatric diseases 83% of the time. Likewise, when OpenAI's GPT-4 model was tested as a diagnostic assistant, physicians at Beth Israel Deaconess Medical Center in Boston judged it inaccurate.

Nonetheless, some experts argue that generative AI is improving in this regard. A Microsoft study claims to have achieved 90.2% accuracy on four challenging medical tests using GPT-4. However, Borkowski and others caution that many technical and compliance hurdles must still be overcome before generative AI can be fully relied on as a medical aid.

In this situation, appropriate regulation and rigorous scientific research are essential. Until these problems are resolved and appropriate safeguards are in place, the widespread deployment of medical generative AI could harm patients and the health care industry as a whole.
