JAKARTA - The UK communications regulator, Ofcom, said technology companies should focus on protecting children from harassment, torture, and pro-suicide content. These are its first steps as the country's online safety enforcer.

Ofcom, which gained new powers when the Online Safety Act took effect last month, said children were its top priority.

Ofcom said its role is to force companies, such as Meta, the owner of Facebook and Instagram, to address the causes of online harm by making their services safer.

However, it will not make decisions about individual videos, posts, messages, or accounts, or respond to individual complaints.

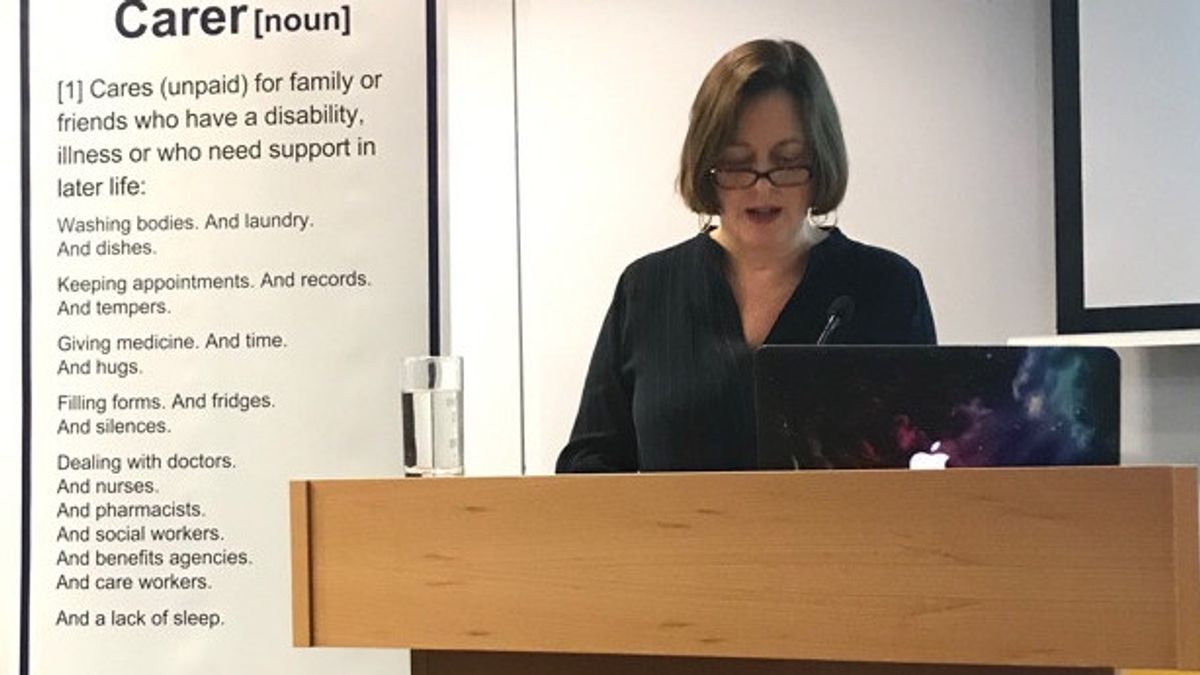

Ofcom's Chief Executive, Melanie Dawes, said the regulator would waste no time in setting out how it expects technology companies to protect people from illegal harm online.

"Children have told us about the dangers they face, and we are determined to create a safer online life for young people especially," she said.

The draft codes, published on Thursday 9 November, include measures such as preventing users who are not on a child's list of connections from sending them messages, and not making children's location information visible.

Ofcom also said companies should use so-called "hash matching" technology to identify illegal images of child sexual abuse by checking them against databases.
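For readers unfamiliar with the term, hash matching can be pictured roughly as follows. This is a minimal sketch, not Ofcom's specification or any company's actual system: the function names and sample data are hypothetical, and it uses an exact SHA-256 digest, which only matches byte-identical files. Production systems typically rely on perceptual hashes that tolerate resizing or re-encoding.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_known(data: bytes, known_hashes: set[str]) -> bool:
    """Check whether the digest of an upload appears in a database of known hashes."""
    return sha256_hex(data) in known_hashes


# Hypothetical database: in practice this would hold digests supplied by a
# trusted body, never the images themselves.
known_hashes = {sha256_hex(b"example flagged file contents")}

print(is_known(b"example flagged file contents", known_hashes))  # True
print(is_known(b"some other upload", known_hashes))              # False
```

The point of the design is that a platform compares fingerprints rather than the files themselves, so it never needs to store or view the original material.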

Ofcom said it would consult on the measures, which also include actions to counter fraud and terrorism, before issuing final versions next year, which will be subject to parliamentary approval.

If companies do not comply with the new law, they could be fined up to £18 million (Rp345.7 billion) or 10% of their annual global turnover.
