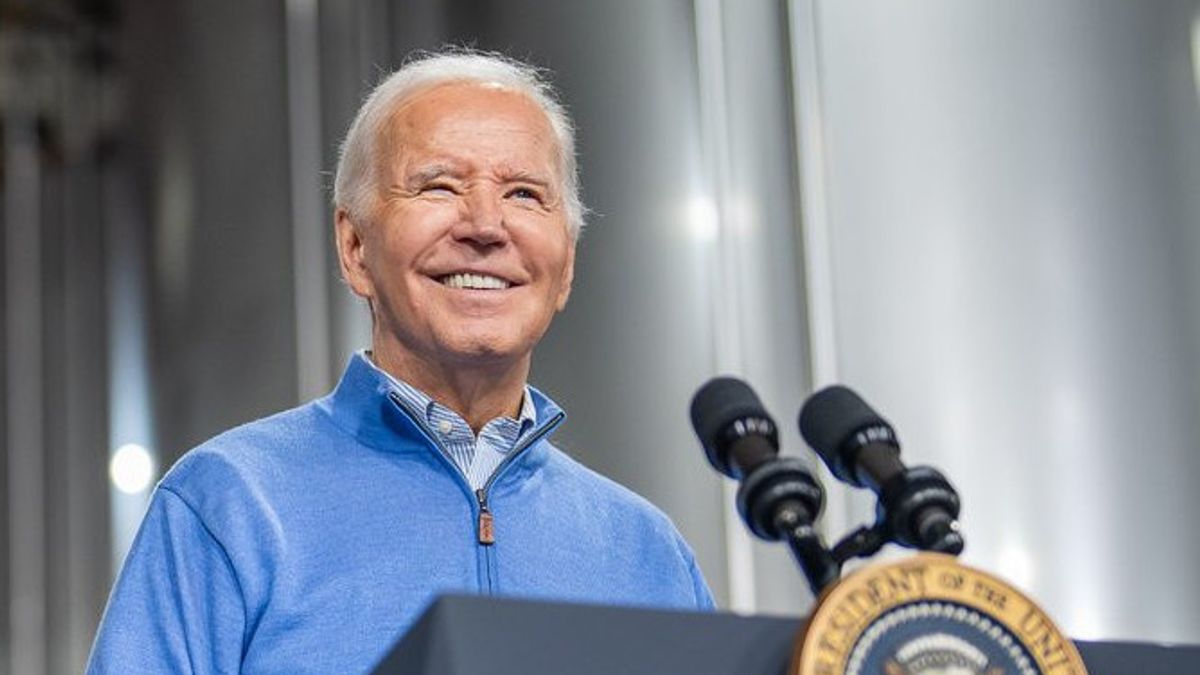

JAKARTA - Meta's Oversight Board said that a Facebook video making a false accusation against US President Joe Biden does not violate the company's current rules. However, the board also criticized those rules as "inconsistent" and too narrowly focused on content generated by artificial intelligence (AI).

User complaints about the manipulated seven-second video of the president prompted the Oversight Board to take up the case last October. The decision, announced on Monday, February 5, is the board's first to address Meta's "manipulated media" policy, which prohibits certain types of manipulated videos, and it comes amid concerns that new AI technology could be used to influence this year's elections.

The Oversight Board said the policy lacks a convincing justification, is inconsistent and confusing to users, and fails to clearly specify the harms it is meant to prevent.

The board advised Meta to update the rules to cover both audio and video content, regardless of whether AI was used, and suggested applying labels that identify such content as manipulated.

Nonetheless, the Oversight Board stopped short of calling for the policy to apply to photos, warning that doing so could make the policy difficult to enforce at Meta's scale.

Meta, which also owns Instagram and WhatsApp, told the board during the review process that it plans to update the policy in response to increasingly realistic new AI developments, according to the decision. The company said it was reviewing the decision and would respond publicly within 60 days.

The manipulated video on Facebook uses real footage of Biden exchanging "I Voted" stickers with his granddaughter during the 2022 midterm elections and kissing her on the cheek. The same version of the video has been going viral since January 2023, the Oversight Board said in its decision.

In its decision, the Oversight Board said Meta was right to leave the video up under its current policy, which prohibits misleadingly altered videos only if they were produced by artificial intelligence or if they make people appear to say words they never actually said.

The board said that content altered without AI is widespread and is not necessarily any less misleading than content generated by AI tools.

The Oversight Board suggested that the policy should also apply to audio-only content and to videos that depict people doing things they never actually did. Enforcement, according to the board, should take the form of labeling such content rather than Meta's current approach of removing posts from its platforms.

The English, Chinese, Japanese, Arabic, and French versions are automatically generated by AI, so the translations may still contain inaccuracies. Please always refer to the Indonesian version as our main language. (system supported by DigitalSiber.id)