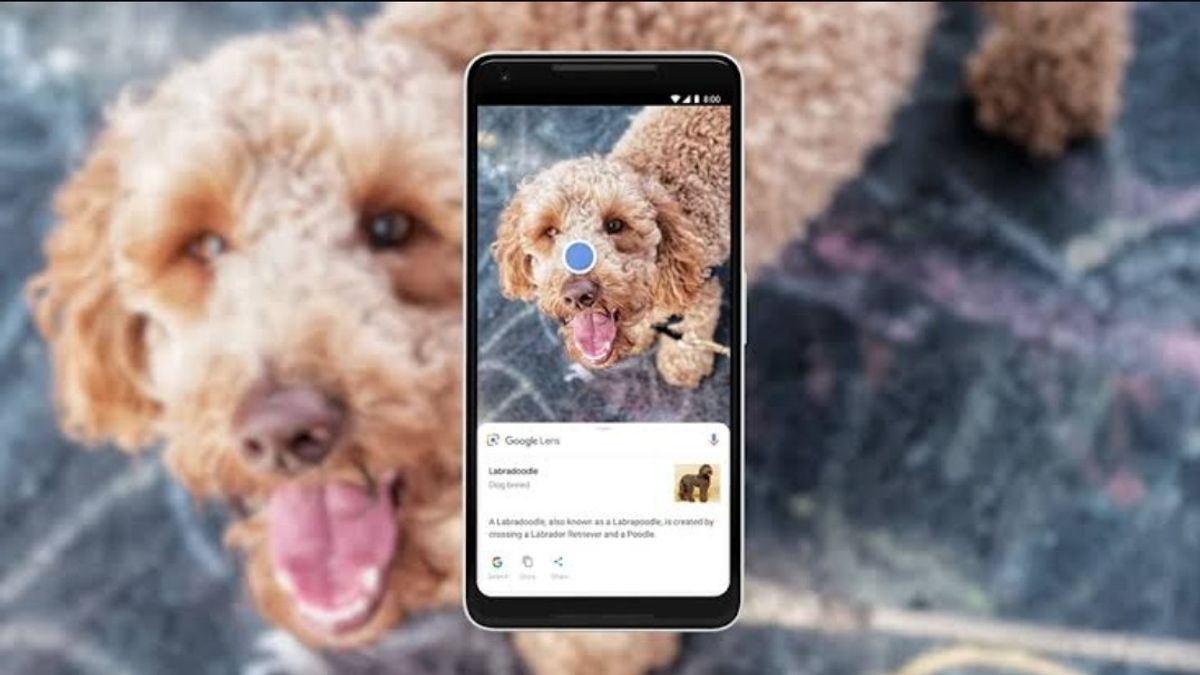

JAKARTA - After testing it in June, Google has launched video-based object search in Google Lens. The feature rolled out on Monday, August 5, and makes it easier for users to point their camera at a real-world object and search for it quickly. Because the search is driven by video, users ask their question aloud so that Google Lens understands the context of what they are looking for.

Before video search launched, Google Lens users had to type extra queries into the Multisearch field to make the results match their context, and they could only add that query after the initial search had already been run. To speed things up, users can now capture an image and add context in a single step. While using the feature, users see a caption that reads, "Talk now to ask about this image." The feature is already available in Google Lens, the Google app, and Chrome.

To use video search in Google Lens, open the Lens search page and tap "Search with your camera."


Next, point the camera at the object you want to search for, then tap and hold the shutter button until the prompt to speak appears. Users can add details right away so that the results are more specific and relevant. While the user is speaking, the transcribed text appears on screen. For now, Google Lens can only process spoken queries in English. If the user mentions only a product name or brand, Lens can still process the query. Video search is already available on all Android devices because the update is rolled out server-side. If the feature has not yet appeared in Chrome or Google Lens, make sure the app is updated to the latest version.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so the translation may contain inaccuracies. Please always treat the Indonesian version as the primary source. (System supported by DigitalSiber.id)