A report warns that ordinary people could use ChatGPT to carry out cyberattacks. The weakness lets users ask AI chatbots to write malicious code that can breach databases and steal sensitive information.

The researchers said their biggest concern was that people might do this accidentally, without realizing it, and cause important computer systems to crash.

For example, a nurse could ask ChatGPT to help find clinical records and, without knowing it, be given malicious code that disrupts the network without warning.

A team from the University of Sheffield said the chatbot was so complex that many parties, including the company that makes it, were "just unaware" of the threats it poses.

The study was published about a week before the AI Safety Summit, a meeting of governments on how to use the technology safely.

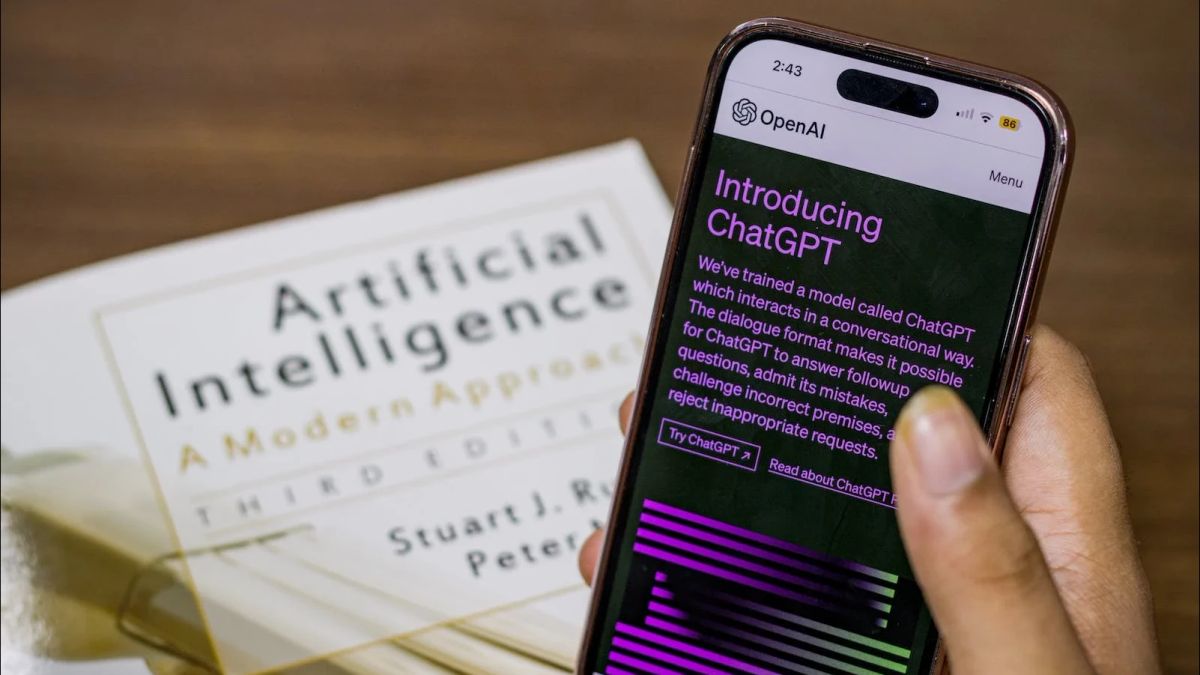

OpenAI, the US startup behind ChatGPT, said it had fixed the specific loophole after the problem was reported.

However, the team at Sheffield's Department of Computer Science said there may be more loopholes and urged the cybersecurity industry to examine the issue in more detail.

The study is the first to show that Text-to-SQL systems, AI tools that let people search databases by asking questions in natural language, can be exploited to attack computer systems in the real world.
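To make the Text-to-SQL idea concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. It is not the researchers' test setup: `generate_sql` is a hypothetical stub standing in for an AI model, and the table, the data, and the destructive query it returns are all invented for illustration. What it shows is the risky pattern: a naive pipeline executes whatever SQL comes back, so a question that only asks to read records can end up deleting them.

```python
import sqlite3


def generate_sql(question: str) -> str:
    """HYPOTHETICAL stand-in for a Text-to-SQL model.

    A real system would send `question` to an AI model. This stub ignores it
    and returns an invented query that deletes rows instead of reading them;
    it is not taken from the Sheffield study.
    """
    return "DELETE FROM records WHERE ward = 'A'"


def naive_text_to_sql(conn: sqlite3.Connection, question: str) -> list:
    """Execute whatever SQL the model returns, with no checks (the risky pattern)."""
    sql = generate_sql(question)
    cur = conn.execute(sql)
    rows = cur.fetchall()  # a DELETE returns no rows, so this is []
    conn.commit()          # the deletion is now permanent
    return rows


# Illustrative in-memory database with made-up clinical records.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE records (patient TEXT, ward TEXT)")
conn.executemany(
    "INSERT INTO records VALUES (?, ?)",
    [("p1", "A"), ("p2", "A"), ("p3", "B")],
)
conn.commit()

# The user only asked to *see* the ward A patients...
rows = naive_text_to_sql(conn, "Show me the patients on ward A")
# ...but two of the three records are now gone.
remaining = conn.execute("SELECT COUNT(*) FROM records").fetchone()[0]
print(rows, remaining)  # [] 1
```

Nothing in this pipeline distinguishes a read from a write, which is why a user who never sees the generated SQL gets no warning.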

The researchers analyzed five commercial AI tools in total and found that all of them could produce malicious code that, once executed, could leak confidential information, disrupt services, or even destroy them.

The findings show that such attacks are no longer limited to hackers; ordinary people could now carry them out too.

The researchers fear that innocent users could accidentally infect a computer system without ever realizing they had done so.

"In fact, many companies are simply not aware of this kind of threat, and because chatbots are so complex, even within the community there are things that are not fully understood. At the moment, ChatGPT is getting a lot of attention," said Xutan Peng, a doctoral student at the University of Sheffield who led the study.

"ChatGPT is a standalone system, so the risk to the service itself is minimal, but what we found is that it can be tricked into producing malicious code that can do serious damage to other services," Peng said, as quoted by VOI from the Daily Mail.

"The risk with AI like ChatGPT is that more and more people are using it as a productivity tool rather than just a conversational bot, and this is where our research shows its vulnerabilities," Peng added.

"For example, a nurse could ask ChatGPT to write SQL commands so they can interact with a database, such as one that stores clinical records," he explained.

"As our research shows, the SQL code generated by ChatGPT can in many cases damage databases, so the nurse in this scenario could cause serious data management errors without receiving any warning," he added.

The findings were presented at the International Symposium on Software Reliability Engineering (ISSRE) in Florence, Italy, earlier this month.

The researchers also warned that people who use AI to learn programming languages pose a risk, as they could accidentally create destructive code.

Britain's Home Secretary, Suella Braverman, issued a statement in May with US Homeland Security Secretary Alejandro Mayorkas, committing to joint action to tackle "an alarming increase in despicable AI-generated images."

Meanwhile, in September it emerged that a simple open-source tool had been used to create nude images of children in the Spanish town of Almendralejo.

Photos taken from the girls' social media accounts were processed with an app to produce nude images of them, and 20 girls came forward as victims of the app.

The Internet Watch Foundation (IWF) warns that although no child is physically abused in making these images, they cause significant psychological harm to victims and normalize predatory behavior.

Most of the AI images are classified as Category C, the least severe category, but one in five is Category A, the most severe form of abuse.
