JAKARTA - Meta Platforms Inc. has announced details of its next-generation artificial intelligence accelerator chip, developed in-house. The new chip, known internally as "Artemis", is expected to help Meta reduce its dependence on Nvidia's artificial intelligence chips and lower its overall energy costs.

"This chip's architecture is fundamentally focused on providing the right balance of compute, memory bandwidth, and memory capacity for serving ranking and recommendation models," Meta wrote in a blog post.

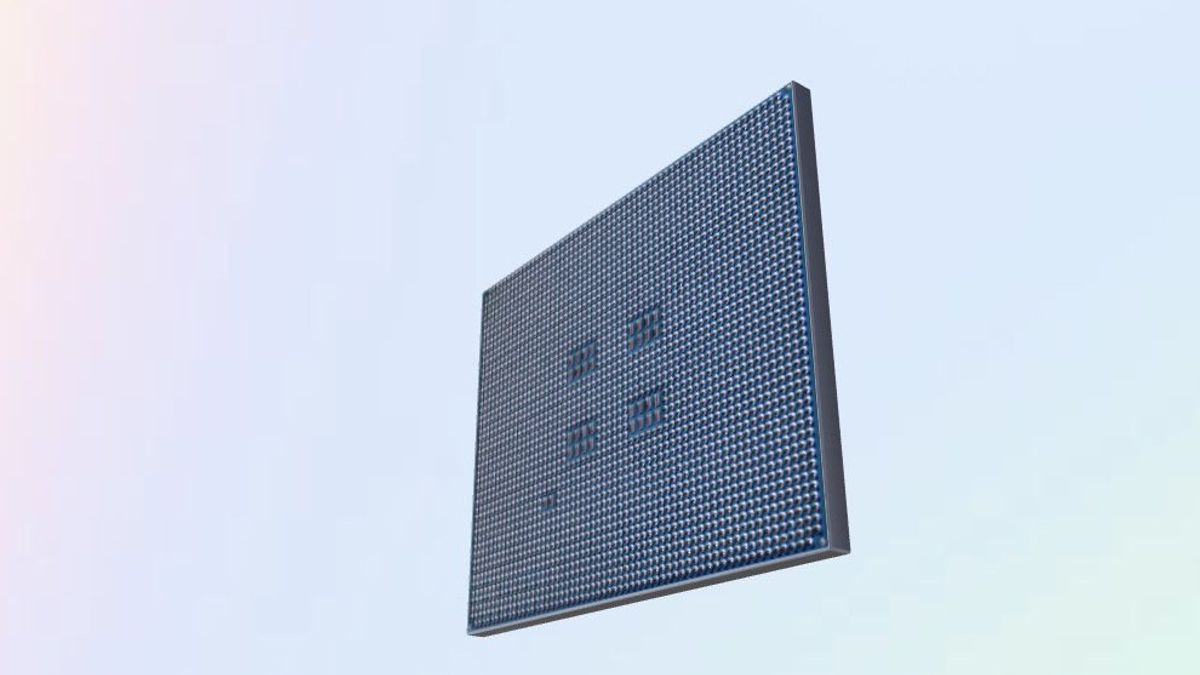

The new Meta Training and Inference Accelerator (MTIA) chip is part of a broader effort at the company to develop custom silicon, an effort that also covers other hardware systems.

In addition to building chips and hardware, Meta has invested heavily in developing the software needed to make the most efficient use of its infrastructure.

Meta has also spent billions of dollars on Nvidia chips and other artificial intelligence chips. Meta CEO Mark Zuckerberg said the company plans to buy around 350,000 of Nvidia's flagship H100 chips this year. Including chips from other suppliers, Meta plans to accumulate the equivalent of 600,000 H100 chips this year.

Taiwan Semiconductor Manufacturing Co will produce the new chip using its "5nm" process. Meta says the chip delivers three times the performance of its first-generation processor.

The chip has already been deployed in Meta's data centers, where it is serving artificial intelligence applications. The company says it has several ongoing programs aimed at expanding MTIA's scope, including support for generative artificial intelligence workloads.
