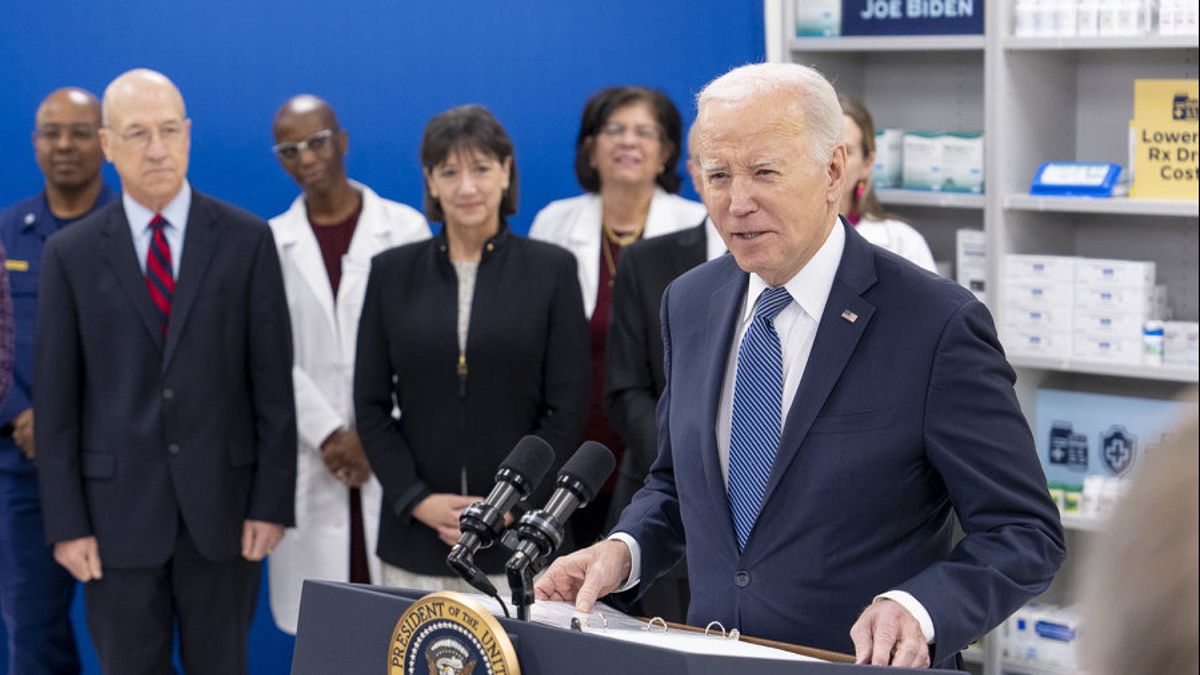

JAKARTA - On Tuesday, December 19, US President Joe Biden's administration announced the first steps toward establishing key standards and guidelines for the safe deployment of generative artificial intelligence and for testing and safeguarding such systems.

The US Department of Commerce's National Institute of Standards and Technology (NIST) said it is seeking public input until February 2 on conducting key tests that are critical to ensuring the safety of artificial intelligence (AI) systems.

Commerce Secretary Gina Raimondo said the effort was prompted by President Joe Biden's October executive order on AI and aims to develop industry standards for the safety, security and trustworthiness of AI that will allow America to continue leading the world in the responsible development and use of this rapidly growing technology.

NIST is developing guidelines for evaluating AI, facilitating the development of standards, and providing test environments for evaluating AI systems. The request seeks input from AI companies and the public on managing the risks of generative AI and on reducing the risks of AI-generated misinformation.

Generative AI, which can create text, photos and videos in response to open-ended prompts, has in recent months sparked both excitement and fear that the technology could make some jobs obsolete, disrupt elections and potentially overpower humans, with catastrophic consequences.

Biden's order directs agencies to set standards for such testing and to address related chemical, biological, radiological, nuclear and cybersecurity risks.

NIST is seeking to establish testing guidelines, including where so-called "red-teaming" would be most beneficial for AI risk assessment and management, and to set best practices for doing so.

External red-teaming has been used in cybersecurity for years to identify new risks. The term comes from US Cold War simulations in which the enemy was called the "red team."

In August, the first public "red-teaming" assessment event in the United States was held during a major cybersecurity conference, organized by AI Village, SeedAI and Humane Intelligence.

Thousands of participants tried to see if they "could make the systems produce undesired output or fail in other ways, with the goal of better understanding the risks faced by these systems," the White House said.

The event "demonstrates how external red-teaming can be an effective tool for identifying new AI risks," the statement added.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so the translations may contain inaccuracies. Please always treat the Indonesian version as the primary text. (System supported by DigitalSiber.id)