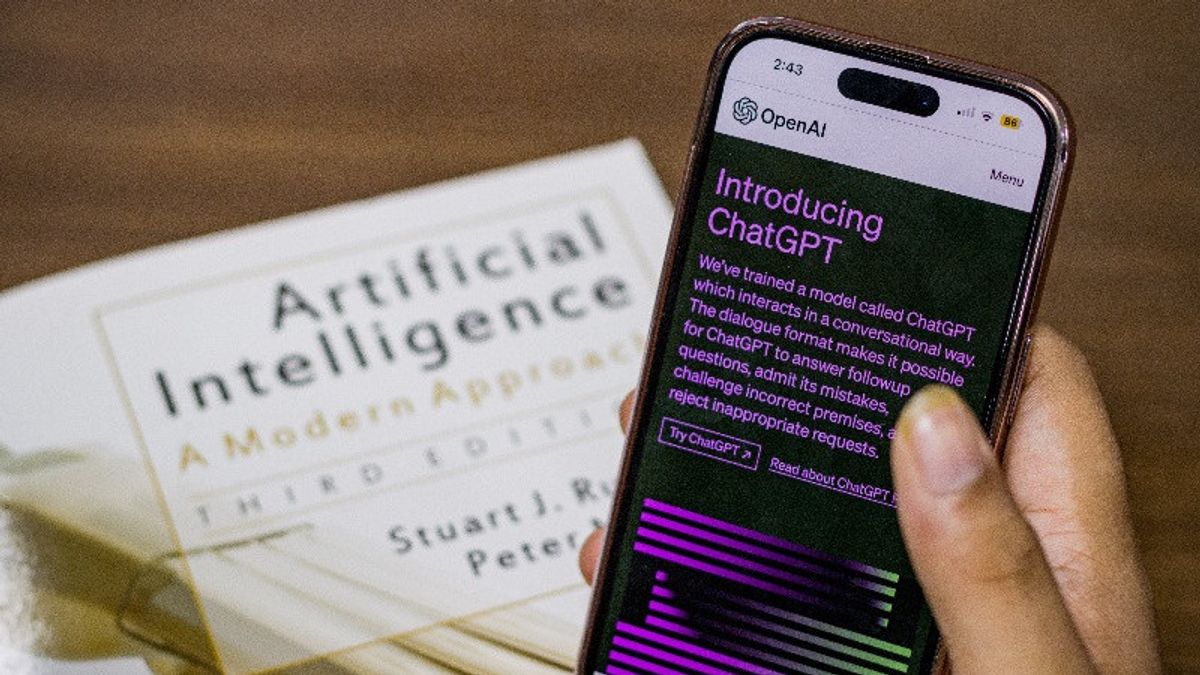

JAKARTA - Generative artificial intelligence (AI) products such as OpenAI's ChatGPT and Google's Bard are increasingly popping up.

However, people who use AI chatbots need to be careful about what they share, including personal data.

Google recently changed its privacy policy so that the company can use publicly available data from across the Internet to train Bard.

Unfortunately, there is no way to opt out of Google's change other than deleting your account. In other words, anything a user has ever posted online could be used to train Bard and other ChatGPT alternatives.

For this reason, here are some types of information you should avoid sharing with AI companies, since it may be used to train their chatbots.

Personal Information That Identifies You

Try not to share personal information that can identify you, such as your full name, address, date of birth, or social security number, with ChatGPT and other chatbots, since what you type may be used to train their AI.

This matters all the more because OpenAI experienced a data breach in early May that may have exposed user data to the wrong people.

Usernames and Passwords

Keep in mind that usernames and passwords can open unexpected doors, especially if you reuse the same credentials across multiple apps and services.

When you log in to the ChatGPT mobile app, it is recommended that you not use the same credentials you use for other applications.


Financial Information

There is no reason to give ChatGPT your personal banking information. OpenAI does not need your credit card number or bank account details.

This is highly sensitive data. If an app claiming to be a ChatGPT client for mobile devices or computers asks you for financial information, you may be dealing with ChatGPT malware.

Under no circumstances should you provide that data. Instead, delete the app and install only official generative AI apps from OpenAI, Google, or Microsoft.

Company Secrets

Not long ago, Samsung fell victim to ChatGPT. The problem was not a chatbot error, however, but the company's own employees, who uploaded code to the AI tool.

The code turned out to be confidential, and it ended up on OpenAI's servers, prompting Samsung to ban the use of any chatbot within the company.

The case of this tech giant shows that you, as a user, must also keep your work confidential. If you need help from ChatGPT, find a more creative way to get it than leaking company secrets. This was reported by BGR on Monday, August 21.

The English, Chinese, Japanese, Arabic, and French versions are automatically generated by AI, so the translations may still contain inaccuracies. Please always treat Indonesian as our main language. (system supported by DigitalSiber.id)