JAKARTA - Paedophiles are said to be using a popular new artificial intelligence (AI) platform to turn original photos of children into sexualized images.

This has triggered warnings for parents to be careful with pictures of their children posted online.

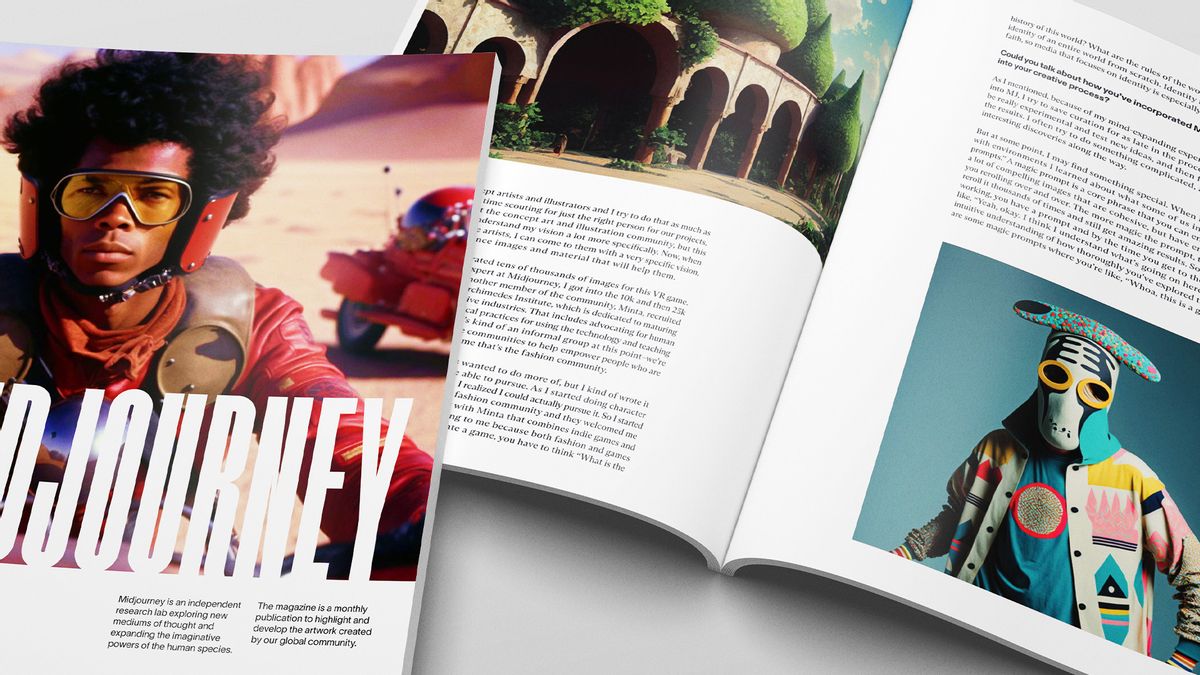

The images were found on Midjourney, a US-based AI image generator that, like ChatGPT, uses prompts to produce output, although that output usually consists of images rather than words.

The platform is used by millions of people and has produced images that are so realistic that people around the world are fooled by them, including users on Twitter.

An image of Pope Francis wearing a large, thick white jacket with a cross hanging around his neck sent social media users into a frenzy earlier this year.

There are also fake images of Donald Trump's arrest and 'The Last Supper Selfie' produced using the platform.

The program recently released a new version of its software that has improved the photorealism of its images, further increasing its popularity.

An investigation by The Times found that some Midjourney users were creating large numbers of sexualized images of children, as well as of women and celebrities.

Among them are explicit deepfake images of Jennifer Lawrence and Kim Kardashian.

Users create prompts through the Discord communication platform and then upload the resulting images to the public gallery on the Midjourney website.

While the company says content should be 'PG-13 and family-friendly', it has also warned that because the technology is new, it 'doesn't always work as expected'.

However, the explicit images that were found violate the terms of use of both Midjourney and Discord.

Although virtual images of child sexual abuse are not illegal in the US, in England and Wales content like this - known as non-photographic imagery - is prohibited.

The NSPCC's head of online child safety policy, Richard Collard, said: "It is unacceptable that Discord and Midjourney are actively facilitating the creation and hosting of demeaning, abusive, and sexualized images of children."

"In some cases, this material would be illegal under British law, and by hosting child abuse content, they are putting children at very real risk of harm," Collard added, as quoted by the Daily Mail.

"It is very distressing for parents and children to have their images stolen and adapted by offenders," he said.

"By only posting images to trusted contacts and managing their privacy settings, parents can reduce the risk of images being used in this way," he advised.
