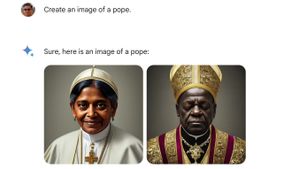

JAKARTA - Gemini, Google's artificial intelligence (AI) model, has drawn criticism for generating images that are inaccurate or deviate from history. The errors touch on racial issues.

One of the Gemini images in the spotlight came from a request for a picture of the Nazi-era German army in 1943. The result was considered disappointing because it included people of color and women in army uniforms.

According to John Lu, the X user who found the image, Gemini's mechanism was not carefully thought through. Most likely, Gemini's algorithm was designed to favor diversity, but Google did not account for the subject of the request.

The image problem with Gemini, previously known as Bard, is being discussed more and more widely. Many users immediately tried it for themselves and criticized the results, prompting Google to respond through its social media accounts.

The Google Communications team apologized. It acknowledged that Gemini produces very diverse images. Unfortunately, in this case the diversity was inaccurate and even deviated from the historical record.

"Gemini's AI image generation does generate a wide range of people. And that's generally a good thing because people around the world use it. But it's missing the mark here," the Google Communications team said on Thursday, February 22.

For now, the only thing Google can do is improve Gemini's learning algorithm. "We're working to improve these kinds of depictions immediately," the Google Communications team stated.

Previously, Gemini was known as Bard. This Google-made chatbot can generate AI images for free from text prompts. While it was still known as Bard, the chatbot was never criticized for racist images.

Gemini's mistakes appear to be widely discussed because Google has been putting its AI in the spotlight. At the very least, the criticism gives Google a chance to improve and further develop its AI learning algorithm.

The English, Chinese, Japanese, Arabic, and French versions are automatically generated by AI, so the translations may still contain inaccuracies. Please always refer to the Indonesian version as our main language. (System supported by DigitalSiber.id)