JAKARTA - ChatGPT developer OpenAI faces a possible defamation lawsuit from an Australian mayor after its chatbot produced false information about his role in a bribery scandal.

Brian Hood, mayor of Hepburn Shire Council, northwest of Melbourne, Australia, has asked OpenAI to correct the information produced by its AI platform.

When a user asked the ChatGPT 3.5 version of the chatbot, released late last year, about the case, it stated that Hood had been found guilty in a foreign bribery scandal involving a subsidiary of the Reserve Bank of Australia (RBA).

In 2011, officials from Note Printing Australia, a subsidiary of the RBA, were found guilty of conspiring to bribe foreign governments.

The offenses took place between 1999 and 2004, and several officials were sentenced for their involvement.

By contrast, the newer fourth version of ChatGPT, released last month, gave a more accurate answer, correctly explaining that Hood was a whistleblower and citing a court ruling that praised his actions.

According to Hood's lawyer, James Naughton, Hood merely worked for Note Printing Australia and was the person who reported that bribes were being paid to foreign officials to secure currency printing contracts.

Hood was never charged with any crime. He said he was shocked to learn that ChatGPT had produced such a misleading answer.

"I felt a bit numb. Because it was so wrong, so very wrong, that it staggered me. And then I became very angry about it," said Hood.

Hood's lawyers further stated that they had sent a letter of concern to OpenAI on March 21.

The letter gave the company 28 days to correct the false information about their client or face a defamation lawsuit.

"This has the potential to be a landmark moment in the sense that it applies defamation law to the new field of artificial intelligence and publication in the IT space," said Naughton.

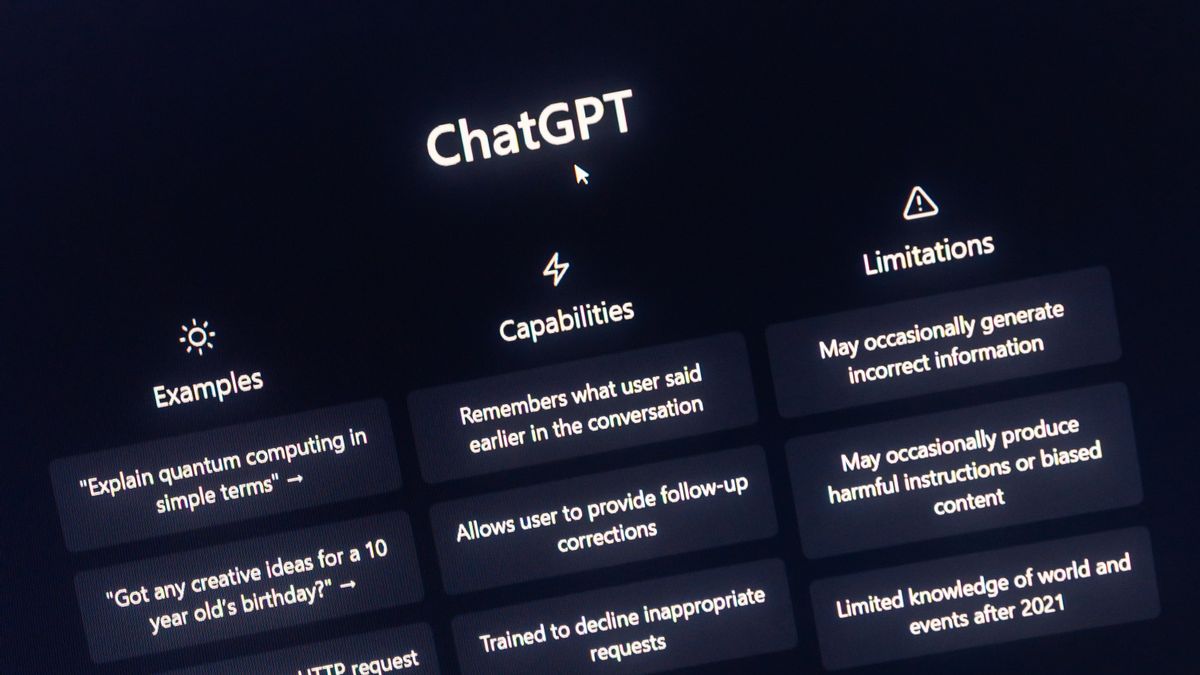

"He's an elected official; his reputation is central to his role," he added.

OpenAI, for its part, has warned users that its AI chatbot can produce inaccurate information about people, places, or facts.

The company has also said that it publicly releases imperfect versions of its chatbot so that it can study and fix such problems, as reported by the Sydney Morning Herald on Thursday, April 6.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so the translations may still contain inaccuracies. Please always refer to the Indonesian version, our main language. (System supported by DigitalSiber.id)