JAKARTA - Meta is trying to make its social media platforms safer for teenagers by updating its content policies. Under these policies, the content teens see will be matched to their age.

The first policy Meta announced updates content recommendations on Instagram and Facebook. Both platforms will automatically place teens in very restrictive content control settings.

"We already apply this setting to new teens when they join Instagram and Facebook, and are now expanding it to teens who are already using these apps," Meta said in an official statement.

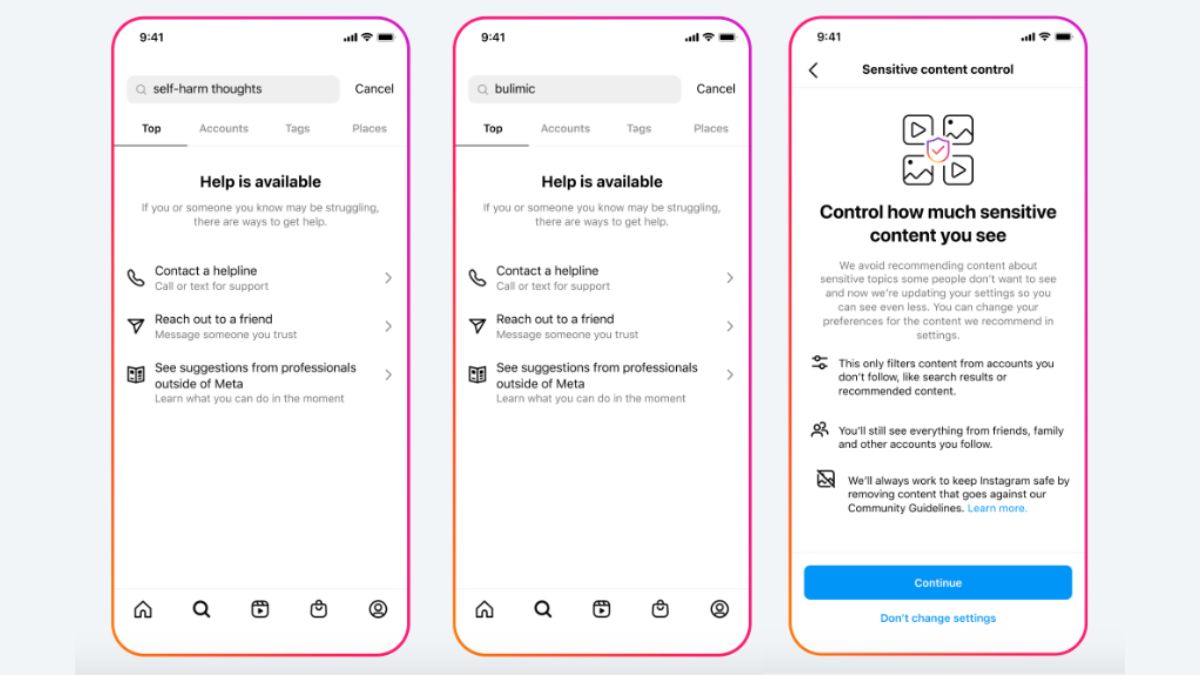

This recommendation control, called Sensitive Content Control on Instagram and Reduce on Facebook, makes it harder for teens to come across content that is unsuitable for their age. Although the feature is turned on automatically, it can be switched off.

Next, Meta will hide content about suicide, self-harm, and eating disorders from accounts registered on Instagram and Facebook as belonging to teenagers.

When teens search for these topics, both Meta-owned platforms will hide the search results and instead direct them to expert resources where they can get appropriate help.


"We already hide search results for suicide and self-harm terms that violate our rules, and we are extending this protection to include more terms," Meta explained.

The final policy encourages teens to review their account settings regularly. Meta will send notifications prompting them to update their privacy settings with a single tap.

When teens tap the Recommended Settings option, Meta's system will restrict who can repost their content, tag them, or mention them. Meta will also hide offensive comments.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so the translations may still contain inaccuracies. Please always refer to Indonesian as our main language. (System supported by DigitalSiber.id)