JAKARTA - Mastodon, touted as a suitable alternative to Twitter, is in fact rife with child sexual abuse material (CSAM).

A new study by the Stanford Internet Observatory found 112 instances of known CSAM among 325,000 posts analyzed on the platform over just two days.

The first instance turned up after just five minutes of searching. The researchers say such content is easily accessible and searchable on the decentralized network.

Using Google's SafeSearch API, the researchers identified explicit images, aided by PhotoDNA, a tool that helps detect known, previously flagged CSAM.

During the study, they found 554 pieces of content matching hashtags or keywords commonly used online by child sexual abuse groups. Such offenders reportedly also organize into groups to share this content.

"We got more PhotoDNA hits in a two-day period than we've probably had in the entire history of our organization doing any kind of social media analysis, and it's not even close," David Thiel, one of the report's researchers, told The Washington Post, as quoted by The Verge on Tuesday, July 25.

"A lot of it is just a result of what seems to be a lack of the tooling that centralized social media platforms use to address child safety concerns," he added.

All 554 were flagged as explicit with the highest confidence by Google SafeSearch. The researchers also found 713 uses of the top 20 CSAM-related hashtags across the Fediverse on posts containing media.

They also found 1,217 text-only posts pointing to off-site CSAM trading or the grooming of minors.

The study notes that the open posting of CSAM is very common. As reported by Gizmodo, the Fediverse is a loose constellation of platforms that eschews centralized ownership and governance in favor of an interactive model prioritizing user autonomy and privacy.

The Fediverse runs on a set of free and open-source web protocols, allowing anyone to set up and host social communities on their own servers, known as instances.

Thiel said Fediverse platforms are especially vulnerable to this problem. "Centralized platforms have ultimate authority over their content and can do as much as possible to stop it, but in the Fediverse you just cut off servers with bad actors and move on, which means the content is still being distributed and still harming victims," he explained.

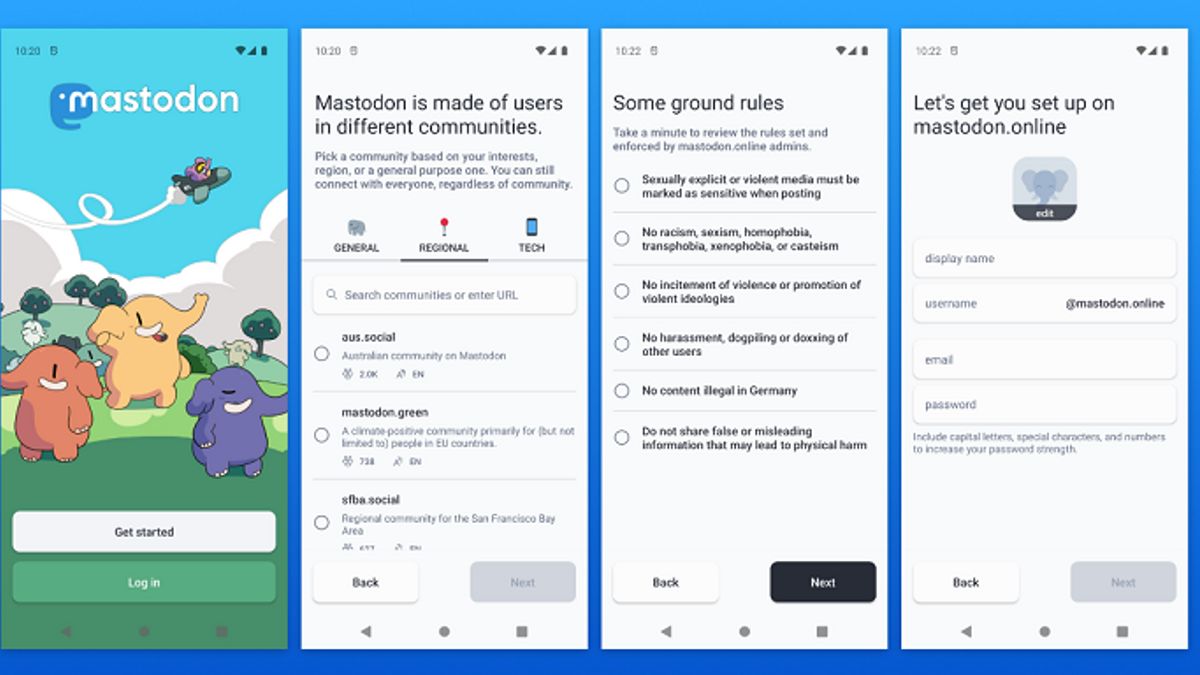

As decentralized platforms such as Mastodon grow in popularity, so do concerns about safety. The researchers therefore recommend that networks like Mastodon give moderators more robust tools, ideally with PhotoDNA integration and CyberTipline reporting.

The English, Chinese, Japanese, Arabic, and French versions are generated automatically by AI, so translation inaccuracies may remain. Please always refer to Indonesian as our primary language. (System supported by DigitalSiber.id)