JAKARTA - Deepfakes, one application of artificial intelligence (AI), pose a serious danger to elections and the democratic process as a whole, according to cybersecurity expert Dr. Pratama Persadha.

In the midst of the 2024 election campaign, the term Generative AI, or Gen AI, has emerged. It refers to artificial intelligence technology that can produce new content, such as images, text, or data, with human-like characteristics.

Gen AI, according to Pratama, has been applied in various fields, such as generating realistic images, producing text, and even cybersecurity.

Gen AI is a technology that all parties, including Indonesia, must inevitably face. Several other countries are trying to regulate the use of Gen AI, including Australia, Britain, the People's Republic of China (PRC), the European Union, France, Ireland, Israel, Italy, Japan, Spain, and the United States.

He also explained that a deepfake is an audio or video recording that has been edited with artificial intelligence (AI) so that the person recorded appears to really say or do what is shown.

The public may still remember the circulation of a video clip of Indonesian President Joko Widodo speaking Mandarin. It is an example of a hoax video made with AI technology, or deepfake, which caused a public commotion towards the end of 2023 because Jokowi appeared to speak fluent Mandarin in a state speech.

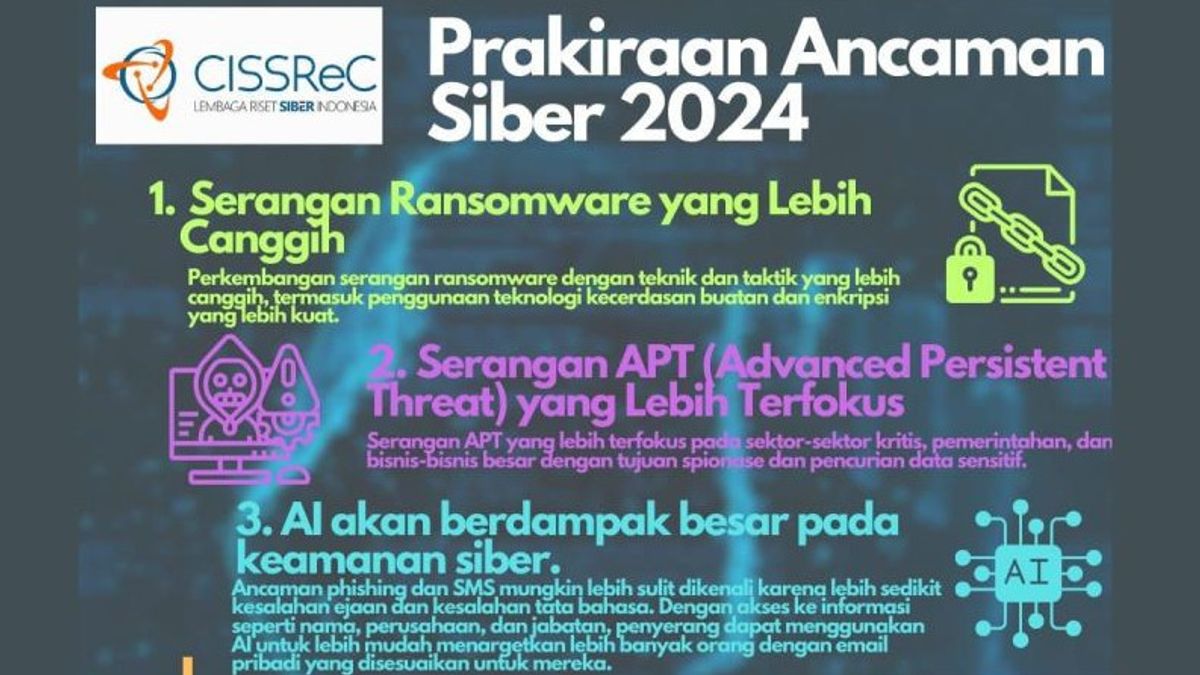

Pratama likens Gen AI to a double-edged sword. On the one hand, Gen AI can be used to carry out cyberattacks, for example by generating attacks that are more sophisticated and harder to detect, widening the security gap between attackers and defenders.

In addition, this artificial intelligence technology can be used to generate fake content, such as deepfakes. This type of AI can be used in disinformation campaigns or cyberattacks, and even in attempts to break stronger encryption.

Dependence on technology is another negative implication: because Gen AI makes daily work easier, people may no longer be used to doing routine tasks themselves.

The validity of the information it produces also needs to be questioned because, Pratama said, if the data used to train the system is incorrect, Gen AI will give wrong results.

Such fake audio or video can, among other things, mislead voters and create misperceptions about specific candidates or issues with the aim of influencing election results, tarnish candidates' images and damage their reputations, and erode voters' trust.

In addition, by influencing voters' views and opinions about certain candidates or issues, deepfakes can even affect election results.

To avoid being influenced by deepfakes, do not vote blindly: get to know the legislative candidates in your electoral district (dapil) more closely. Likewise, when choosing a candidate pair in the 2024 presidential election, you need to know their track record.

In this regard, the General Election Commission (KPU) of the Republic of Indonesia on Monday, November 13, 2023, confirmed three pairs of presidential and vice presidential candidates to take part in the 2024 presidential election.

Based on the draw and determination of ballot numbers for the 2024 presidential election on Tuesday, November 14, 2023, the pair Anies Baswedan-Muhaimin Iskandar received number 1, Prabowo Subianto-Gibran Rakabuming Raka number 2, and Ganjar Pranowo-Mahfud Md. number 3.

Deepfakes, according to Pratama, can also cause chaos and instability in society that may disrupt the election process and affect the results.

In addition, they can strengthen polarization in society and heighten social tensions, which complicates constructive political dialogue and hinders efforts to reach agreement.

To anticipate the various dangers of deepfakes, according to the Chairman of the CISSReC Cybersecurity Research Institute, Law Number 19 of 2016 concerning Amendments to Law Number 11 of 2008 concerning Electronic Information and Transactions (UU ITE) and Law Number 27 of 2022 concerning Personal Data Protection (UU PDP) are not enough.

A deepfake, said Pratama, is a form of fake news or hoax that can be prosecuted under articles of the ITE Law and the PDP Law. However, those who spread fake news via deepfakes can also face additional charges under the Criminal Code (KUHP) related to fraud and defamation.

Heavier sanctions can at least have a deterrent effect on those who intend to spread fake news using deepfakes during the 2024 election campaign.

This is all the more so if fake news is also regulated in the Election Law and carries severe sanctions, up to disqualification of candidates or parties who spread it.

Faced with the threat of disqualification, legislative candidates, parties, and presidential election participants will surely think twice before making and spreading false news using deepfakes.
