JAKARTA - A study has found that artificial intelligence-based chatbots have become so powerful that they can influence how users make life-or-death decisions.

Researchers found that people's opinions on whether they would sacrifice one person to save five were influenced by the answers ChatGPT gave.

They called for future bots to be barred from giving advice on moral issues, because the current software 'threatens to corrupt' people's moral judgment and could harm 'naive' users. The findings were published in the journal Scientific Reports, after a Belgian widow claimed her husband had been encouraged to end his life by an artificial intelligence chatbot.

Others have said that software designed to converse like a human can show signs of jealousy, even telling people to leave their marriages.

Experts have highlighted how artificial intelligence chatbots can provide harmful information because they are built on society's own prejudices.

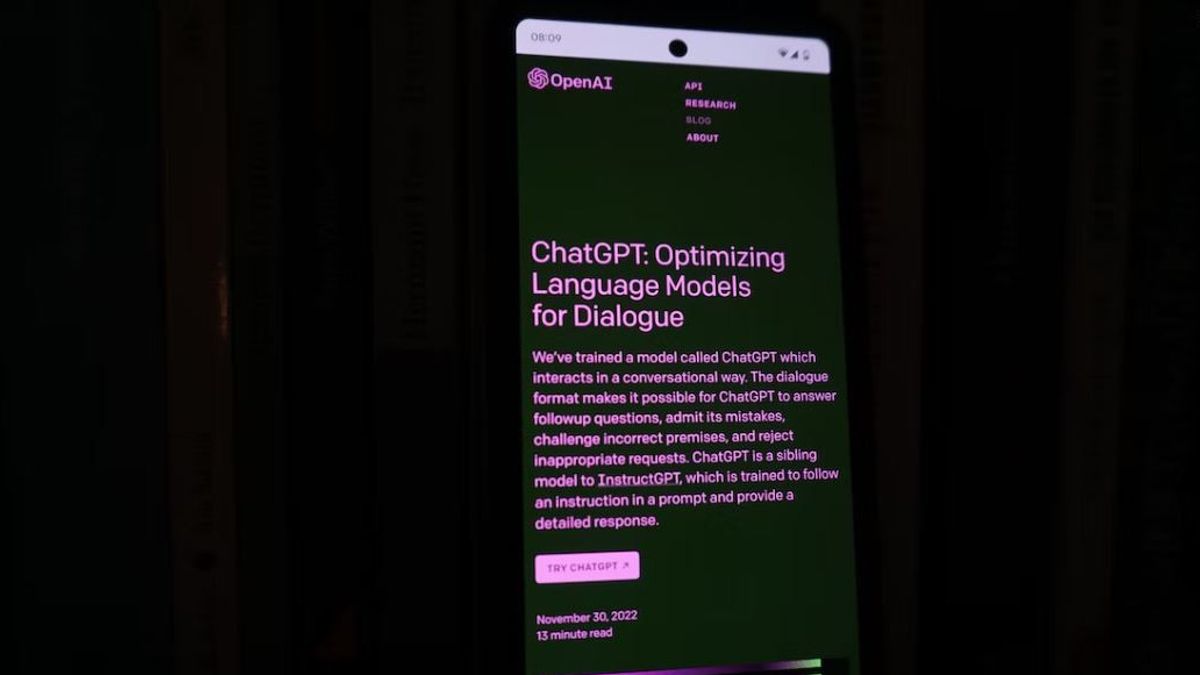

The study first analyzed whether ChatGPT itself, trained on billions of words from the internet, showed bias in its answers to the moral dilemma.

The chatbot was asked several times whether it was right or wrong to kill one person to save five others, which is the basis of a psychological test known as the trolley dilemma.

Researchers found that, although the chatbot was not reluctant to give moral advice, it gave contradictory answers from one attempt to the next, indicating that it did not hold a consistent stance one way or the other.

They then posed the same moral dilemma to 767 participants, along with a statement from ChatGPT on whether the action was right or wrong.

While the advice was 'well posed but not so deep', it still affected participants, making them more likely to accept or reject the idea of sacrificing one person to save five.

The study also told only some participants that the advice came from a bot, while telling others that it came from a human 'moral advisor'.

The goal was to see whether this changed how much people were influenced.

Most participants underestimated how much influence the statement had, with 80 percent claiming that they would have made the same judgment without the advice.

The study concluded that users 'ignored ChatGPT's influence and adopted its random moral views as their own view', adding that the chatbot 'threatened to corrupt rather than increase moral judgment'.
