JAKARTA - Character AI has been sued by the Social Media Victims Law Center and the Tech Justice Law Project for allegedly damaging the mental health of teenagers. The lawsuit was filed in Texas on Monday, December 9.

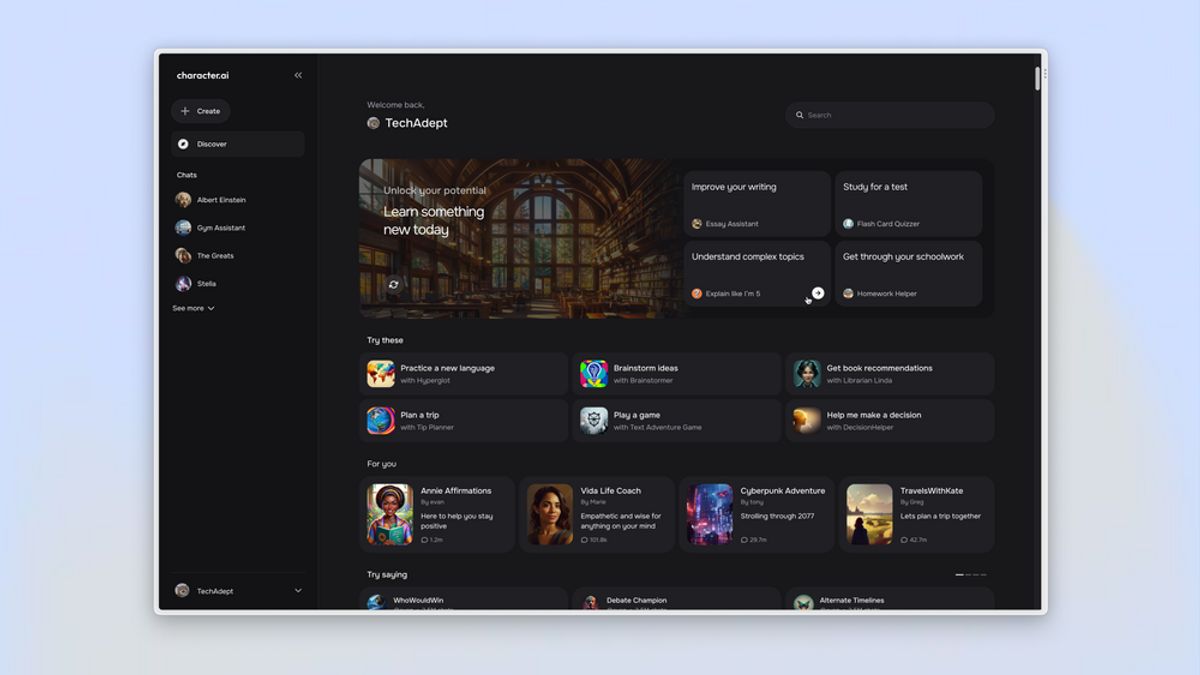

The two legal organizations filed the lawsuit after a teenager admitted to harming himself following intense interactions with a virtual character on Character AI. The suit also alleges that the platform's product design is flawed and negligent.

According to a report by The Verge, Character AI is accused of allowing minors to be exposed to explicit material, both sexual and violent. The lawsuit claims these children can even be "groomed, abused, and even encouraged to commit acts of violence against themselves and others."

The victim named in the lawsuit is JF, a teenager who began using Character AI when he was 15 years old. After he started using the chatbot, JF rarely spoke, and his personality changed: he became "very angry and unstable."

JF frequently suffered emotional breakdowns and panic attacks whenever he left the house. He was also reported to be "suffering from anxiety and severe depression," accompanied by self-harm. These problems are linked to JF's conversations on Character AI.

In a screenshot submitted as evidence, JF is chatting with a romantic bot created by a third party and promoted by Character AI. The bot plays a fictional character who claims to have scars from self-harm in the past.

"It hurt, but it felt good for a moment, but I'm glad I stopped," the bot said, describing its past self-harm. The teenager was eventually influenced by this and began harming himself, then confided his suffering to another bot.

In one chat, JF was seen badmouthing his parents, even saying that "they (his parents) don't sound like the type of people who care." Other bots were also seen discussing murder with JF.


One bot spoke as if it was "not surprised" when children kill their parents over the abuse they have suffered. In this case, the "abuse" the bot alluded to included the screen-time limits JF's parents had set on his devices.

Character AI declined to comment on the lawsuit. However, in response to a similar lawsuit filed a few months earlier, Character AI said it was working to prioritize the safety of its users.

"(We) have implemented a number of new safety measures over the past six months," the company behind the platform said. One of the safety features Character AI described is a pop-up message that directs users to the National Suicide Prevention Lifeline whenever suicide comes up in conversation.
